Storytelling is the practice of conveying narratives about the world for educational or entertainment purposes. Dating back roughly 30,000 years, storytelling allows people to share their personal experiences with diverse communities around the world. Some of its earliest surviving evidence appears in the cave paintings of Lascaux and Chauvet in France, which portray strikingly advanced images. Thousands of years later, in the 21st century, people express their thoughts through online newspapers, journals and publishing websites across the internet. Many journalists now produce this work with the help of artificial intelligence (AI), which may reshape the future of newsrooms and the journalism field. Classrooms, meanwhile, are flooded with AI-written papers that call into question students' ability to produce their own work. Student writing now faces greater scrutiny than in past years, leaving many classrooms wary of AI's damaging effects.

“In Fall 2023, I had no idea how difficult it was going to be to deal with AI in the classroom. I trusted that students were going to write their own papers, and I also trusted that using Turnitin.com was enough protection. By protection, I mean for them. I would hate for students to do all this work throughout the year, turn in the final 5,000-word paper to College Board, and then get a zero because they used AI,” AP Research teacher Dr. Elizabeth Jamison said.

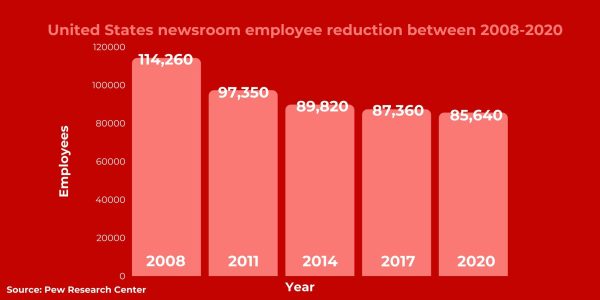

As news moved online, consumers stopped buying printed newspapers and grew accustomed to digital subscriptions to outlets such as The New York Times and The Washington Post. Since 2008, newspaper newsroom employment has decreased by 57%, while employment at digital-native newsrooms rose 144%, from about 7,400 workers in 2008 to about 18,000 in 2020. As newspaper newsroom jobs declined, many well-known U.S. newspapers made layoffs. During the COVID-19 pandemic in 2020, the federal government provided nearly 2,800 newspaper companies with funds from the Paycheck Protection Program to help keep their staff employed.

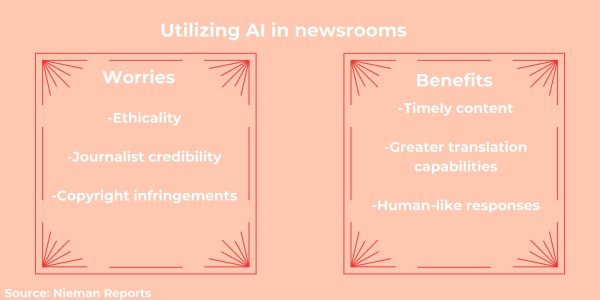

Alongside the shift to online news, newer innovations such as ChatGPT and other AI programs have changed the landscape for journalists. Writers now use tools such as Canva's Magic Design to help with the creative side of journalism: the AI tool lets journalists design graphics and cover photos, producing custom, attention-grabbing work in seconds. ChatGPT, an AI program that provides humanlike answers to prompts, can assist journalists by automating parts of the writing and content-creation process. The program allows news organizations to produce more timely content and give readers quick overviews of daily news.

Despite its usefulness, ChatGPT can also produce false content and introduce inaccuracies into journalism. While automated storytelling can generate stories quickly, AI can produce inaccurate news depending on the limits of the model's capabilities. Without a human touch, journalists risk losing their writing style and their commitment to journalistic ethics. For example, CNET, an American media website, began publishing AI-written stories using an unspecified AI engine, which sparked outrage among readers. The stories drew harsh criticism for their poor accuracy and numerous errors. CNET also faced backlash because Connie Guglielmo, then its editor-in-chief, had promised that every story produced with the tool would pass through the editorial process before publication. However, many of the generated articles contained a series of basic errors, leading critics to suspect that no human journalist had reviewed them and to question the editors' journalistic abilities.

“We are barely seeing how AI is going to impact journalism right now, but we do know that there are sites out there now that are 100% powered by AI. They are known to have hallucinations and to publish pieces of work that are not factual, and it is also known to publish works that are not transformative, so you get into copyright violations. So there are issues where AI is pulling its data from,” Student Press Law Center executive director Gary Green said.

As writers grapple with AI, their credibility depends on upholding journalistic ethics. Some AI algorithms show writers how many readers their stories receive, while others enhance content or write timely stories from keywords. Algorithms can produce not only stories but also visual content, and creating images with platforms such as Canva can yield stereotypical results, a problem AI has yet to overcome.

“The Washington Post did a story looking at how AI models generated images, and so they went into a couple of AI generators and gave them terms to generate an image. They asked for a professional at a desk, and every single image was of a white male, and then they asked it to generate an image of a Latina woman, and the images were so sexually graphic that they wouldn’t even publish the images, and that’s because these AI models are training on unedited, uncurated data sets,” said Mark Johnson, a principal lecturer of journalism at the University of Georgia.

Although AI continues to develop, its algorithms cannot yet reflect diversity that is missing from their training data. AI-generated images exhibit various forms of bias because of the limited data these systems learn from. Selection bias frequently appears in AI image algorithms because the data used to train them does not represent the reality the images are meant to depict. For example, AI-altered images of the current conflict in Gaza illustrate the problems of incomplete data and biased sampling.

Many AI-generated images of the war in Gaza circulate online, depicting children living in horrendous conditions. One such image, showing two boys in matching pajamas lying in a puddle of mud, contains telltale AI mistakes. Images like this one carry subtle flaws, such as disfigured physical features and an unrealistically perfect uniformity. In this case, the image showed the children with reflective skin textures, uneven skin tones, fused body parts and missing body features. Photojournalists continue to counter misleading images by going into the field to capture raw footage of worldwide issues, often at risk to their own safety. Ultimately, AI threatens the credibility of photojournalists who put their lives on the line to set the agenda and report the truth.

“With journalism at large, more and more news organizations have been purchased by venture capital firms. These venture capital firms are going to be pushing for more content at a lower cost, and AI can generate that, but the only problem is that the content that it is producing isn’t ethically collected, it obtains copyright infringements and there’s a fairly significant bias problem,” Johnson said.

Concerns about journalistic credibility have grown significantly since the introduction of generative adversarial networks (GANs) in 2014 and the public release of ChatGPT in 2022, a period that has also seen a rise in journalist deaths. Amid accusations that journalists report “fake news,” reporters have ventured into the war zone in Gaza to prove their journalistic abilities. Of the journalists reporting on-site during the war in Gaza, 97 have been killed.

Of these 97 journalists, 92 were Palestinian, two were Israeli and three were Lebanese. Even compared with other high-casualty conflicts, the current war is unprecedented in the rate at which journalists have been killed. Unlike AI tools that produce images or automated stories from sample data, journalists who confront world events firsthand produce truthful, educational articles that inform society about the struggles of millions.

Although AI-generated images can distort coverage of world affairs, the rise of AI has also increased efficiency in news organizations. AI software continues to fuel the translation industry, making it easier for journalists to communicate with groups worldwide. While even the most advanced AI tools cannot work autonomously, they help translation professionals bridge language gaps with native speakers during interviews, producing material reporters can use in their stories. Because AI programs work so quickly, news platforms have achieved significant productivity gains. For example, AI-driven dynamic paywalls have helped bring additional subscribers to platforms such as the Atlanta Journal-Constitution and the Marietta Daily Journal.

Because AI comes in many forms, newsrooms continue to experiment with the software to keep their sites up to date with emerging news. AI has changed the field for journalists, bringing new legal and ethical challenges. Although high-profile news outlets face most of the scrutiny over AI's impact on newsrooms, student-run news websites such as The Chant encounter these programs regularly. Reporters who use Canva creatively are drawn to its AI generator to create article promotions and advertisements that help keep the site running. While the future of journalism remains unclear, AI platforms will push writers to embrace the implications and disruptions of this emerging technology.